HData AI Librarian

Application Description

HData's AI Librarian is a research aid for projects involving energy forms, filings, and other documents from various regulators, as well as interactions between energy companies and regulators. It is designed to give concise, accurate answers to questions about any document in the HData Library.

- Industry: All

- Sub-Industry: All

- Data Timeline: NA

- Jurisdiction: Federal forms and documents (state forms and documents coming soon)

Data Sources

Federal

- Federal Energy Regulatory Commission (FERC)

  - Financial Forms 1, 2, 6, and 60 (annual and quarterly)

  - Dockets (coming soon)

- Securities and Exchange Commission (SEC)

  - 10-K and 10-Q from energy companies (coming soon)

State

- Dockets (coming soon)

- Compliance Filings (coming soon)

AI Librarian - How to Start

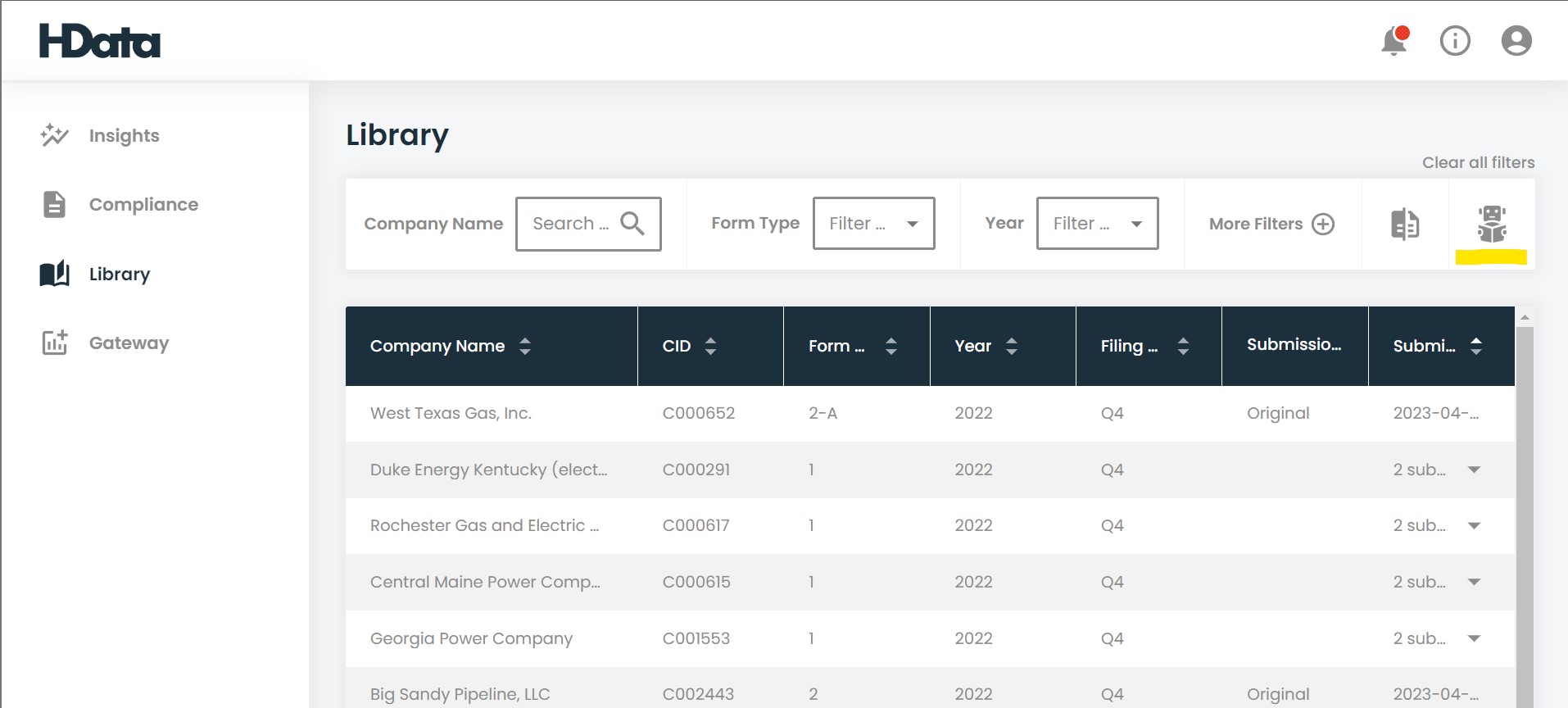

The HData AI Librarian lives inside the HData Library, which can be accessed via the Library link on the left-hand navigation pane. You can start a session with the Librarian by clicking the robot icon at the right end of the top filter bar, highlighted above.

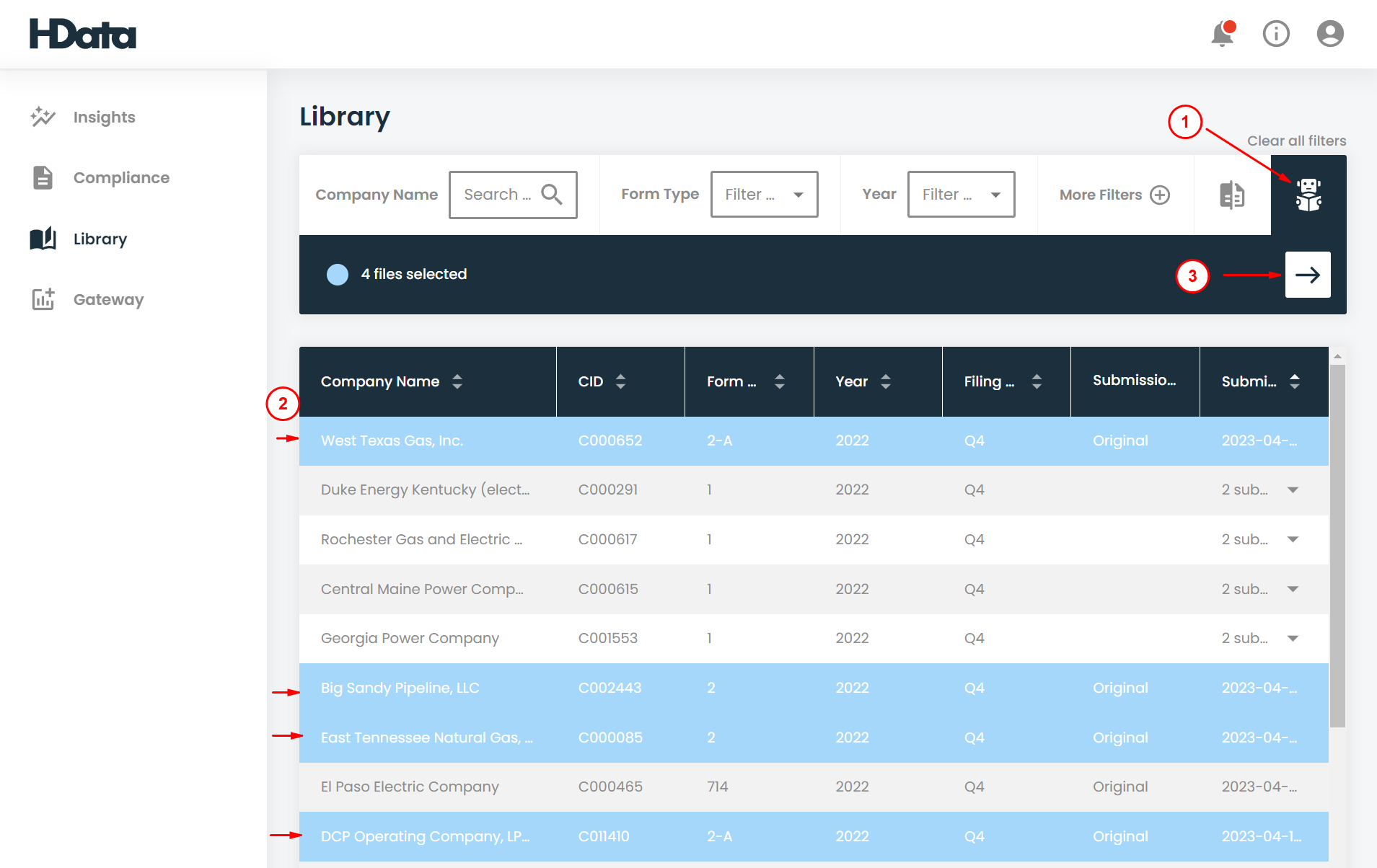

Clicking the robot icon brings you to a screen where you select the documents you want to know more about (location 1 in the screenshot above). You must select at least one document before you can start your session with the Librarian. You can use the filters at the top of the screen to narrow the results and assist in your search (for example, limiting files by year or industry). Once you have selected the document(s), click the arrow at location 3 in the screenshot above to start your session with the Librarian. Please note that the more documents you select, the longer the system may take to respond. Response time also depends on the question you ask: a complex question across many documents will take longer than a simple question about a single document. For more information on how to ask the right question, please consult the "Tips and Tricks" section of this document.

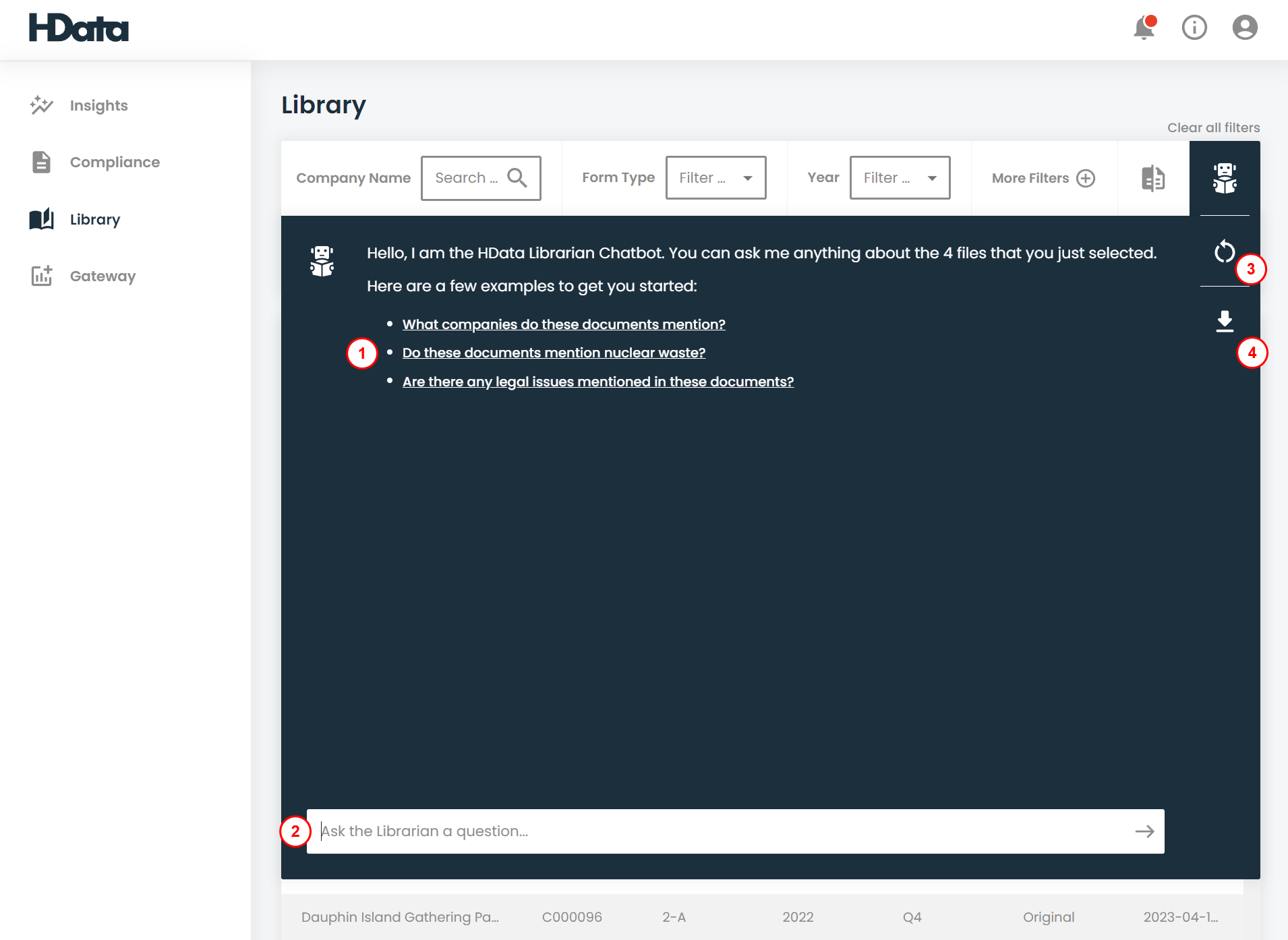

Clicking the robot highlighted at arrow 1 will bring you to a screen where you can either select a pre-built question to start (location 1 in the screenshot above) or type your own question in the text bar at the bottom of the screen (location 2). Think of the Librarian as your research assistant. You can ask questions, make requests, or ask for follow-up or clarification on any response the Librarian gives you. If you have used ChatGPT before, the interaction with the HData Librarian will feel very similar. When you finish your conversation, you can either refresh the session via the icon at location 3 in the screenshot or download your conversation history with the Librarian via the download icon at location 4.

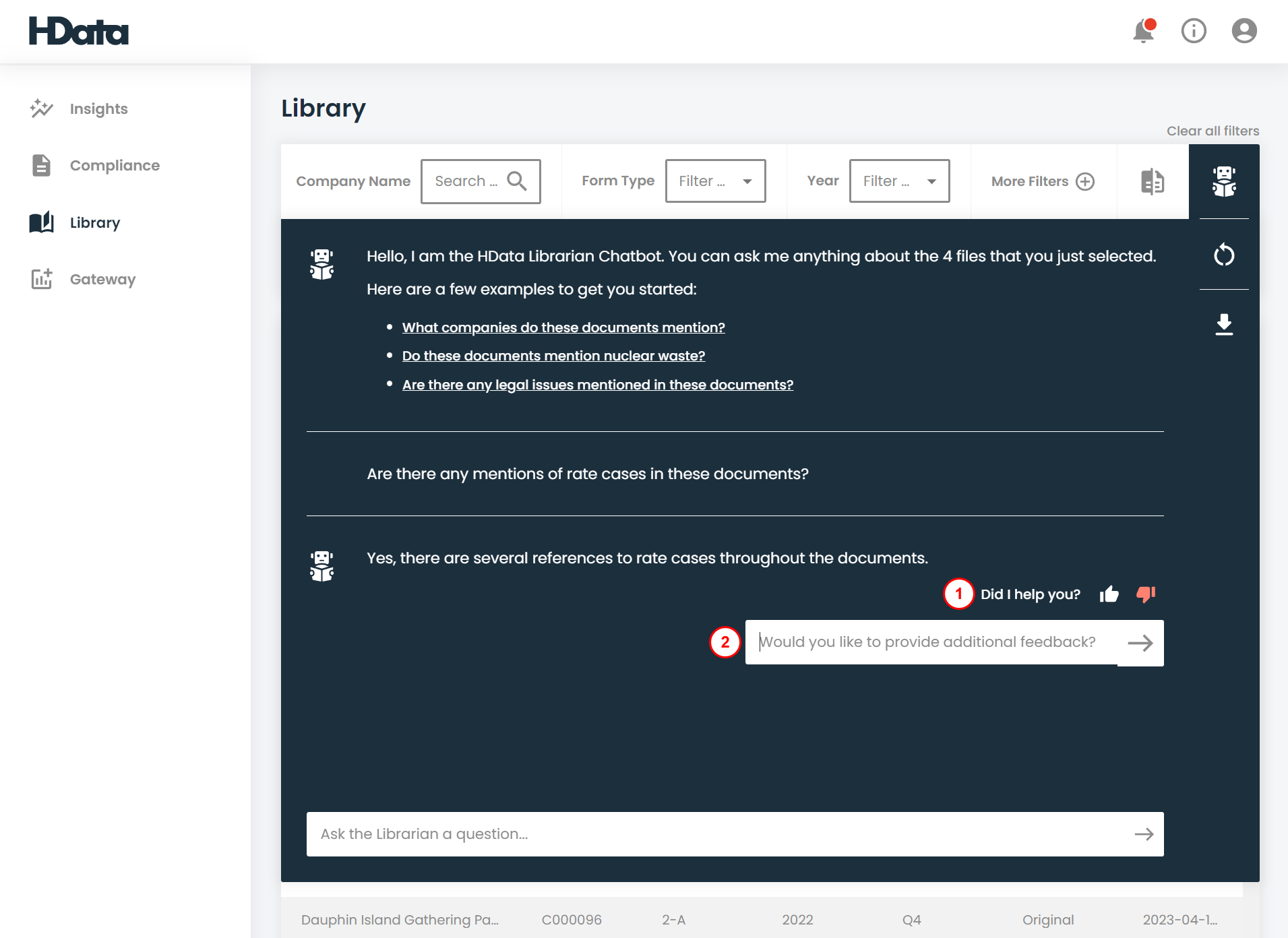

Sometimes the Librarian may give a response that is not ideal, is incorrect, or doesn't provide enough context. This happens with any AI model, and the best way to help is to provide feedback. Our AI model is always learning and improving, so an answer one day may be slightly different, and ideally better, the next day. You can provide feedback on any answer via the thumb-up or thumb-down icons at location 1 in the screenshot above. A thumb-up means that the response you received was great and helped answer your question accurately. A thumb-down means the response was not great. If you select the thumb-down, you will be given an option to enter additional information.

We highly encourage you to use these thumb-up and thumb-down icons. A thumb-up "rewards" the system, informing it that responses like the one it gave you are in line with expectations, and the Librarian will try to repeat the answer if other users ask a similar question. A thumb-down tells the system that it needs to improve, and any additional feedback you enter in the text bar gives us the context we need to go back into the model and make improvements.

Tips and Tricks

Asking the right questions is key to getting the response you need. Questions may need to change based on which documents you select or how many you select. For example, this excerpt from the Librarian looks at a single document from Baltimore Gas & Electric from 2021:

Q: What companies does this document mention?

A: Here is a list of the companies mentioned in the document: 1. Baltimore Gas and Electric Company 2. Exelon Corporation 3. Exelon Generation Company, LLC 4. Exelon BSC 5. ComEd 6. PECO 7. Pepco Holdings 8. Delmarva 9. ACE 10. PEPCO 11. Exelon Corp 12. EXGEN

Q: Which one of these companies is the reporting company?

A: The reporting company is Baltimore Gas and Electric Company.

Q: Which of the companies mentioned are in Maryland?

A: Here is a list of companies mentioned in the document that are located in Maryland 1. Baltimore Gas and Electric Company - 2 Center Plaza, 110 West Fayette Street, Baltimore, MD 21201

The conversation is very basic but accurate, and it tends to focus on the universe of companies mentioned in BG&E’s filing. Now let's ask the same question of 10 documents from various companies in 2021.

Q: What companies does this document mention?

A: Here is a list of the companies mentioned in the documents: 1. The United Illuminating Company 2. AVANGRID Service Company 3. New York State Electric & Gas Corporation 4. Rochester Gas and Electric Corporation 5. Central Maine Power Company 6. UIL Holdings Corporation 7. Connecticut Natural Gas Corporation 8. The Southern Connecticut Gas Company 9. Idaho Power Company 10. BANK OF NEW YORK 11. BLOOMBERG FINANCE LP 12. BROADRIDGE FINANCIAL SOLUTIONS 13. BUSINESS WIRE INC 14. D F KING & COMPANY INC 15. DEUTSCHE BANK TRUST CO 16. EQ SHAREOWNER SERVICES 17. MARKIT NORTH AMERICA INC 18. MODERN NETWORKS IR, LLC 19. MOODY'S ANALYTICS INC 20. NASDAQ CORPORATE SOLUTIONS LLC 21. NEW YORK STOCK EXCHANGE I 22. Q4 INC 23. RIVEL RESEARCH GROUP INC 24. SIDOTI & COMPANY LLC 25. Alabama Power Company 26. Align Energy, Inc. 27. Arges Incorporated 28. AlaDyn Holdings, LLC 29. QuantHub, LLC 30. Techstars Corporate Partner 2017 LLC 31. Shipshape Solutions, Inc 32. HD Data, Inc 33. Observation Without Limits, LLC 34. Alabama Property Company 35. Omega Realty Holdings V, LLC 36. Southeast Opportunity Zone Fund I, LLLP 37. LakeTown, LLC 38. LakeTown Stillwood, LLC 39. LakeTown Mills Creek, LLC 40. LakeTown Kennebec, LLC 41. LakeTown Coosa Run, LLC 42. LakeTown Talisi, LLC 43. Reese Street, LLC 44. PDC SPE, LLC 45. PDC Member, LLC 46. PDC Holdings, LLC 47. 112 14th Street South Holdings, LLC 48. 113 13th Street South Holdings, LLC 49. 230 2nd Avenue South Holdings, LLC 50. 1200 2nd Avenue South Holdings, LLC 51. Hesco Parkside, LLC 52. 1209 1st Avenue South Holdings, LLC 53. Powell Steam Plant, LLC 54. 1301 1st Avenue Holdins, LLC 55. 1227 1st Avenue South Holdings, LLC 56. Southern Electric Generating Company 57. Xcelerate Holdings LLC

Right off the bat you get a larger list of companies, which makes sense since we selected 10 documents. However, when the Librarian gave us the list of relevant documents, it only gave us filings from The United Illuminating Company, Idaho Power Company, and Alabama Power Company. Other companies we had selected, such as Florida Power & Light and San Diego Gas & Electric, were not mentioned. It’s also hard to know which companies are associated with a particular reporting company. Why did the Librarian give a response like this?

Our AI is constrained by the engine we run it on, and that engine has only so much computing power available at one time. We regulate this through what are called “tokens.” Each time you send the Librarian a question, it has a certain number of tokens to work with. Its job is to figure out how to divvy up those tokens across pieces of the documents and give the result back to you in an answer. This keeps the conversation concise and understandable. It also protects our system from being overloaded by too many questions and from spending too many tokens on a single response; in other words, we don’t want the Librarian to ramble or go off on a tangent.

This means that asking the right questions, for the right number of documents, will give you the best results possible. It may take some experimenting to get it right. Don’t get frustrated if the answer seems too basic or not detailed enough for what you intended. You may need to adjust the documents you selected, or adjust the question to be more specific.
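To make the token idea concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not how the Librarian actually works: the budget figures and the function name `tokens_per_document` are hypothetical, and an even split across documents is assumed purely for simplicity.

```python
def tokens_per_document(total_budget: int, reserved_for_answer: int, num_documents: int) -> int:
    """Evenly split the tokens left over after reserving room for the answer.

    All values are hypothetical; the even split is an assumption for illustration.
    """
    if num_documents < 1:
        raise ValueError("Select at least one document.")
    available = total_budget - reserved_for_answer
    if available <= 0:
        raise ValueError("The answer reservation uses up the whole budget.")
    return available // num_documents


# Hypothetical figures, chosen only to show the trend.
TOTAL_BUDGET = 4000
RESERVED_FOR_ANSWER = 500

for count in (1, 3, 10):
    share = tokens_per_document(TOTAL_BUDGET, RESERVED_FOR_ANSWER, count)
    print(f"{count} document(s): about {share} tokens each")
```

With these made-up numbers, a single document gets 3,500 tokens of attention while each of 10 documents gets only 350. That is the intuition behind matching the breadth of your question to the number of documents you select.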

Here are some examples of good and bad questions to ask for a small list of documents versus a larger list of documents. Some questions are good for smaller lists of documents but bad for larger lists and vice versa.

Small list (1-3 documents)

- Good

  - What was the proposed return on equity for the rate case by X company in 2020?

    - Why: This question is great if you know which company is doing a rate case but don’t know the particulars. You can ask a more direct question and get a more direct response. It is a very good question if you’re looking at a single document.

  - Give me a summary of the litigation between the company and the regional court on environmental law violations and the current status of the case.

    - Why: This is great if you know which company is engaged in a lawsuit and want to understand how the lawsuit has changed over time by selecting the company’s Form 1s from 2020, 2021, and 2022.

- Bad

  - Does this document mention a rate case?

    - Why: That question is better for a larger list of documents, allowing you to identify which company may be engaged in a rate case. If you select only one or two documents, asking that question and getting a “There is no mention of a rate case” will quickly get frustrating.

Large list (3+ documents)

- Good

  - Which of these companies mentions wildfire mitigation expenses in their report to the FERC?

    - Why: This question is great for a large set of documents because it can help you identify the right company. You’re asking a broad question that only requires a short answer of “It’s this company.” From there, you can select filings from that company and ask more specific questions.

- Bad

  - Give me a summary of Rate Case number 123456

    - Why: This is too specific a request. The Librarian will take time to go through all the documents to get the right result, and may confuse multiple rate cases and give you too general an answer. This question is more appropriate for a single document you know contains references to that rate case.

  - Give me a list of the companies mentioned in the documents.

    - Why: There are going to be too many companies to mention, and the Librarian will cap the number of companies it sends you.

These are just a few sample questions. The keys to a positive user experience are a willingness to experiment and patience with the Librarian if the responses aren’t exactly what you’d like. It may just require a little tweaking of your question, or the Librarian may improve over time as users continue to give feedback.

About the HData Librarian

Artificial Intelligence (AI) is a popular topic of discussion and the facts and myths surrounding it can cloud the use cases for it. That said, we want to provide a little bit of information on how we developed the HData AI Librarian, what it can and can't do, and a bit of how it works.

Simply put, AI is a technology that allows computers and machines to think and act in a way that mimics people. In the first half of the 20th century, British logician and computer pioneer Alan Turing started developing and experimenting with smart machines. These machines have been iterated on and form the basis of computers and much of the AI we know today. Artificial Intelligence is continuing to evolve, and as it does, we at HData are focused on harnessing its capability to ensure energy companies can be more efficient in understanding, analyzing, and deriving insights from the tens of thousands of FERC reports that are accessible through our platform.

HData's Librarian is what is called a "Generative AI." This means that it generates responses when given a prompt by someone. We use text-based responses, but the Generative AI family also includes image and video generation. That being said, Generative AI, and more specifically GPT, has its limitations. Despite being one of the most advanced models available, it is still imperfect in understanding and interpreting human language. One of the main limitations is that it relies heavily on the quality and quantity of the data it is trained on.

Thus, if the training data is biased, incomplete, or faulty, it could lead to inaccuracies in its responses. Additionally, systems like ChatGPT may struggle with context-specific knowledge or tasks requiring more complex reasoning, such as advanced problem-solving or critical thinking. That is why HData continues to test different ways of transforming how we store the FERC reports in our data stores while maintaining their actual context and content. We are also trying techniques such as Semantic Search and different Large Language Models to optimize how AI understands our domain-specific documents.

This is also why we implemented a feedback mechanism accessible to you. The best way for us to train our model is to get actual user feedback. We also link back to any documents the AI uses to create the answer it gives you so you can check its work. You can trust the Librarian to give you the best response it can, but it is always important to verify the work. Much like we can claim someone "is only human," we ask that the same courtesy be extended to our Librarian.

We know that AI is not a silver bullet and the journey is far from over. We must be prudent when building ChatGPT-like capabilities for users to better understand FERC reports. After all, many of our users are the key individuals carefully stewarding this data from each organization to the FERC. Data is meant to be used, and we are continuously working to make it even more accessible.

If you wish to understand more about our AI, you can read a post by our Chief Technology Officer, Yuval Lubowich, on how we use AI and our logic in developing it. Some of the "about" section uses excerpts from his post.